Self-Flying Mount in World of Warcraft With Neural Nets

Forewarning: using a third-party program to control a character is strictly against Blizzard's Terms of Service.

When I was 14 years old, I spent countless hours flying through Icecrown Citadel collecting Titanium Ore. I used the Routes addon to create the most efficient routes and maximize the gold I could earn per hour. Even though I was getting my dopamine hits, it was a mundane task; it felt like a chore. If only there was a better way...

A few years ago, I came across a YouTuber named Sentdex. He had a video series on creating a self-driving car in GTA V. I wondered if I could apply the same concept to teach a World of Warcraft (WoW) character to follow the same route I used to fly all those years ago. Only one way to find out!

Long story short, it turns out to be possible! This video demonstrates it in action.

What you just saw is a neural network taking images as input and predicting three possible outputs, or actions. I used supervised learning to train the model on a multi-label classification task. The project consisted of three parts: gathering data, training the model, and controlling the character.

Gathering Data

To train the model, I needed a lot of labeled data mapping inputs to outputs. To get this data, I controlled the character myself, doing what I wanted the network to learn: following the route.

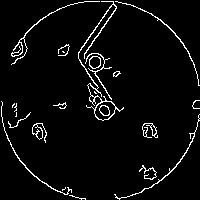

Each input is a 200x200 image captured from a fixed region of the screen: the location of the minimap in the user interface. Each image is preprocessed with a Gaussian blur and edge detection. The theory is that preprocessing reduces noise, making it easier for the network to detect features. The image above compares a raw capture with its preprocessed version.
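The post doesn't name the library or the exact edge detector. A typical choice would be OpenCV's `cv2.GaussianBlur` followed by `cv2.Canny`; to stay dependency-light, here is a NumPy-only sketch that blurs with a 5x5 Gaussian kernel and then thresholds the Sobel gradient magnitude. The kernel size, sigma, and `edge_threshold` are assumptions, and this is a simpler detector than Canny (no non-maximum suppression or hysteresis).

```python
import numpy as np


def gaussian_kernel(size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Normalized 2D Gaussian kernel (size should be odd)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def filter2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Same-size 2D filtering (cross-correlation) with edge-replicated borders."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T


def preprocess(gray: np.ndarray, edge_threshold: float = 0.25) -> np.ndarray:
    """Blur a grayscale minimap capture, then return a binary (0/255) edge map."""
    img = gray.astype(np.float64) / 255.0
    blurred = filter2d(img, gaussian_kernel(5, 1.0))  # suppress pixel-level noise
    gx = filter2d(blurred, SOBEL_X)                    # horizontal gradient
    gy = filter2d(blurred, SOBEL_Y)                    # vertical gradient
    magnitude = np.hypot(gx, gy)
    return np.where(magnitude > edge_threshold, 255, 0).astype(np.uint8)
```

The model takes a single input channel, so this assumes the capture has already been converted to grayscale.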

Each output is a multi-hot encoded vector representing multiple simultaneous actions. This is a fancy way of saying an array of N binary values (0 or 1) for N possible actions, here in the order [left, right, w]. More than one value can be 1 because in this use case multiple keys can be pressed at once. Examples:

[1,0,1] -> "left" and "w" pressed, "right" not pressed

[0,1,1] -> "right" and "w" pressed, "left" not pressed

[0,0,0] -> no keys pressed

[1,1,1] -> all keys pressed
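A minimal sketch of this encoding, assuming the [left, right, w] order implied by the examples; `encode_keys` and `decode_keys` are hypothetical helper names.

```python
import numpy as np

# Output order inferred from the examples: index 0 = left, 1 = right, 2 = w.
ACTIONS = ("left", "right", "w")


def encode_keys(pressed: set[str]) -> np.ndarray:
    """Map the set of currently pressed keys to a multi-hot vector."""
    return np.array([1 if action in pressed else 0 for action in ACTIONS], dtype=np.uint8)


def decode_keys(vector) -> set[str]:
    """Inverse mapping: multi-hot vector back to a set of key names."""
    return {action for action, bit in zip(ACTIONS, vector) if bit}
```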

I must call out that, to keep it simple, I committed a cardinal sin of WoW: I was training the neural network to be a keyboard-turning noob! My 14-year-old self would be ashamed.

To build the training set, I needed to record the input and output data. I used a loop with a frame counter to add captured images and keypresses to a buffer. Every 100 frames (10 seconds, so roughly 10 frames per second), I dumped the buffer into a compressed NumPy archive (.npz) and saved it to disk.
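The post doesn't show the recording script. The sketch below assumes the captured frames arrive as an iterable of (image, multi-hot label) pairs; in a real recorder those would come from grabbing the minimap region and polling the keyboard, which are left out here. It writes every 100 frames to a compressed `.npz` file with `np.savez_compressed`; the file naming and the `images`/`labels` array keys are assumptions.

```python
import os

import numpy as np

FRAMES_PER_FILE = 100  # 100 frames at ~10 fps is ~10 seconds of play


def record(frames, out_dir: str, frames_per_file: int = FRAMES_PER_FILE) -> list[str]:
    """Buffer (image, label) pairs and dump the buffer to disk every `frames_per_file` frames.

    `frames` is any iterable of (image, multi_hot_label) pairs. Frames left in a
    partially filled buffer when the iterable ends are not saved.
    """
    os.makedirs(out_dir, exist_ok=True)
    images, labels, paths = [], [], []
    for counter, (image, label) in enumerate(frames, start=1):
        images.append(image)
        labels.append(label)
        if counter % frames_per_file == 0:
            path = os.path.join(out_dir, f"chunk_{counter // frames_per_file:05d}.npz")
            np.savez_compressed(path, images=np.stack(images), labels=np.stack(labels))
            paths.append(path)
            images, labels = [], []  # start a fresh buffer
    return paths
```

Loading the data back for training is then a matter of reading `["images"]` and `["labels"]` from each file with `np.load` and concatenating them.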

Then I just had to follow the route, controlling my character with "left", "right" and "w". Luckily, I have a level 70 character from when I played Classic WoW a few years ago. As you can imagine, this was even more mundane than when I was 14, especially having to keyboard turn while gathering the valuable data.

I gathered data by following a route in Hellfire Peninsula for 30 minutes. At 10 frames per second, this gave me 18,000 data points to work with.

Training the Model

The model is a basic convolutional neural network (CNN) written with PyTorch. It consists of convolutional layers for feature extraction followed by fully connected layers for classification.

Following the route consists of holding down the "w" key to fly forward with some left and right turns sprinkled in between. In fact, of the 18,000 data points, "w" was pressed in 17,992 of them (99.95%). There were only 1000 frames with "left" and 1021 with "right" (about 5.6% each). For this reason, it is crucial to balance the data. I used a WeightedRandomSampler to ensure that the model would see a more balanced distribution of actions during training.
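The post doesn't say how the sample weights were computed. One common scheme, sketched here as an assumption, groups each frame by its turning label (straight, left, right, or both) and weights it by the inverse of that group's frequency, so each group is drawn roughly equally often. `WeightedRandomSampler(weights, num_samples, replacement=True)` is PyTorch's API; the grouping and the `make_sampler` name are illustrative.

```python
import numpy as np
import torch
from torch.utils.data import WeightedRandomSampler


def make_sampler(labels: np.ndarray) -> WeightedRandomSampler:
    """Build a sampler from an (N, 3) multi-hot label array in [left, right, w] order."""
    # Turning group per frame: 0 = straight, 1 = left only, 2 = right only, 3 = both.
    groups = labels[:, 0].astype(np.int64) + 2 * labels[:, 1].astype(np.int64)
    counts = np.bincount(groups, minlength=4)
    # Inverse-frequency weights: frames from rare groups are drawn more often.
    weights = 1.0 / counts[groups]
    return WeightedRandomSampler(
        weights=torch.as_tensor(weights, dtype=torch.double),
        num_samples=len(labels),
        replacement=True,
    )
```

The sampler is passed as `DataLoader(dataset, batch_size=64, sampler=make_sampler(labels))`, leaving `shuffle` at its default of `False`, since PyTorch rejects a custom sampler combined with `shuffle=True`. Because "w" appears in nearly every frame, it stays heavily represented even after balancing the turns.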

Several key design choices aimed to prevent overfitting as much as possible. I used a dropout layer after each hidden fully connected layer, with a high dropout rate (0.7) to force the model to learn more robust features. The architecture uses three convolutional layers with ReLU activations and max pooling, followed by three hidden fully connected layers and a final output layer:

Conv2D(1, 32) -> ReLU -> MaxPool2D -> Conv2D(32, 64) -> ReLU -> MaxPool2D -> Conv2D(64, 128) -> ReLU -> MaxPool2D -> Flatten -> Linear -> ReLU -> Dropout(0.7) -> Linear -> ReLU -> Dropout(0.7) -> Linear -> ReLU -> Dropout(0.7) -> Linear(to 3 outputs)

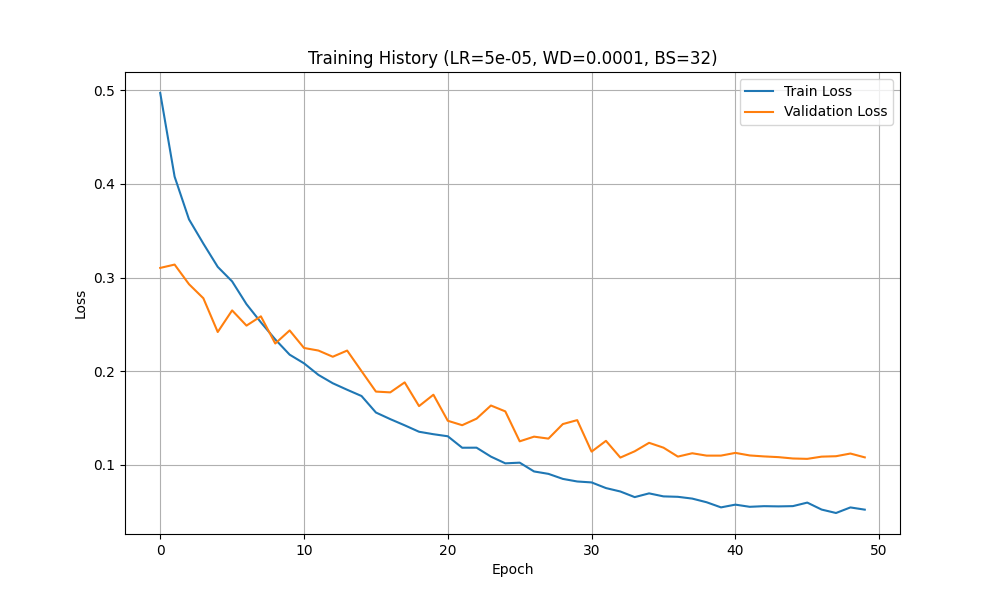
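Here is a PyTorch sketch of that layer sequence. The post doesn't list kernel sizes or hidden-layer widths, so the 3x3 kernels with padding 1, 2x2 pooling, and hidden widths of 256, 128 and 64 are assumptions. With those choices, a 200x200 input shrinks to 25x25 after three pooling steps, so the first linear layer sees 128 x 25 x 25 = 80,000 features.

```python
import torch
from torch import nn


class MinimapCNN(nn.Module):
    """Sketch of the described architecture; kernel sizes and hidden widths are assumptions."""

    def __init__(self, num_actions: int = 3, dropout: float = 0.7):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),    # 200 -> 100
            nn.Conv2d(32, 64, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),   # 100 -> 50
            nn.Conv2d(64, 128, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),  # 50 -> 25
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(128 * 25 * 25, 256), nn.ReLU(), nn.Dropout(dropout),
            nn.Linear(256, 128), nn.ReLU(), nn.Dropout(dropout),
            nn.Linear(128, 64), nn.ReLU(), nn.Dropout(dropout),
            nn.Linear(64, num_actions),  # raw logits; apply sigmoid to get per-key probabilities
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, 1, 200, 200) preprocessed minimap images
        return self.classifier(self.features(x))
```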

I used binary cross-entropy loss since each action (left, right, forward) can be pressed independently or together. I used the Adam optimizer with a learning rate of 1e-4 and a weight decay of 5e-4, which helped prevent overfitting. I also implemented a learning rate scheduler that reduced the learning rate when the validation loss plateaued.

After training for 15 epochs with a batch size of 64, the model achieved a validation loss of around 0.15.
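A sketch of that training setup, assuming the model outputs raw logits (as in a typical PyTorch setup). `nn.BCEWithLogitsLoss` combines the sigmoid with binary cross-entropy in a numerically stable way. The `ReduceLROnPlateau` factor and patience are assumptions, and the helper names are hypothetical.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader


def make_training_objects(model: nn.Module):
    # Binary cross-entropy on raw logits: one independent yes/no decision per key.
    criterion = nn.BCEWithLogitsLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-4, weight_decay=5e-4)
    # factor and patience are assumptions; the post only says the LR drops on a plateau.
    scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.5, patience=2)
    return criterion, optimizer, scheduler


def run_epoch(model, loader, criterion, optimizer=None) -> float:
    """One pass over `loader`; trains if an optimizer is given, otherwise only evaluates."""
    training = optimizer is not None
    model.train(training)  # enables dropout only while training
    total, count = 0.0, 0
    with torch.set_grad_enabled(training):
        for images, labels in loader:
            loss = criterion(model(images), labels.float())
            if training:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            total += loss.item() * images.size(0)
            count += images.size(0)
    return total / count


def fit(model, train_loader: DataLoader, val_loader: DataLoader, epochs: int = 15):
    criterion, optimizer, scheduler = make_training_objects(model)
    history = []
    for epoch in range(epochs):
        train_loss = run_epoch(model, train_loader, criterion, optimizer)
        val_loss = run_epoch(model, val_loader, criterion)
        scheduler.step(val_loss)  # lower the LR when validation loss stops improving
        history.append((train_loss, val_loss))
        print(f"epoch {epoch + 1}: train {train_loss:.4f}  val {val_loss:.4f}")
    return history
```

With the settings above, the training loader would use `batch_size=64` along with the `WeightedRandomSampler`.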

Controlling the Character

The most exciting part was putting the model to the test. I created an inference script that:

Loads the trained model

Captures the minimap in real-time

Processes the image the same way it was processed during training

Feeds the processed image to the model

Takes the model's predictions and translates them to keyboard actions

The prediction happens in real-time, approximately 10 times per second. For each frame, the model outputs three values between 0 and 1, representing the confidence that each key (left, right, w) should be pressed.
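The loop can be sketched as follows. The capture, preprocessing, and key-output steps are passed in as hypothetical hooks, and the trained weights would be restored beforehand with something like `model.load_state_dict(torch.load("minimap_cnn.pt"))` (the file name is hypothetical). Dividing by 255 is an assumption: whatever scaling training used must be repeated here.

```python
import time

import numpy as np
import torch


def run_inference(model, capture_fn, preprocess_fn, act_fn, hz: float = 10.0, max_steps=None):
    """Capture -> preprocess -> predict -> act, roughly `hz` times per second.

    capture_fn, preprocess_fn and act_fn are hypothetical hooks standing in for
    minimap screen capture, the training-time preprocessing, and key output.
    """
    model.eval()  # disables dropout
    period = 1.0 / hz
    step = 0
    while max_steps is None or step < max_steps:
        start = time.perf_counter()
        image = preprocess_fn(capture_fn())  # (H, W) uint8 edge map
        # Scaling must match training; dividing by 255 is an assumption here.
        x = torch.from_numpy(image.astype(np.float32) / 255.0)[None, None]  # (1, 1, H, W)
        with torch.no_grad():
            probs = torch.sigmoid(model(x))[0].tolist()  # [p_left, p_right, p_w]
        act_fn(probs)
        step += 1
        # Sleep for whatever remains of this frame's time budget.
        time.sleep(max(0.0, period - (time.perf_counter() - start)))
```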

I implemented a threshold-based approach where:

If confidence for "left" > 0.4, press the left arrow key

If confidence for "right" > 0.4, press the right arrow key

If confidence for "w" > 0.9, press the w key

Notice the higher threshold for "w" - this was necessary because the model was initially biased toward pressing "w" all the time. This makes sense considering that 99.95% of the training data contained "w" keypresses!
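The threshold rules fit in a small function. This is a sketch: `decide_keys` is a hypothetical name, and the strict `>` comparison follows the wording of the list. The post doesn't say what happens when both "left" and "right" clear their thresholds; this version simply holds both, mirroring the [1,1,1] label.

```python
# Per-key confidence thresholds from the list above.
THRESHOLDS = {"left": 0.4, "right": 0.4, "w": 0.9}
ACTIONS = ("left", "right", "w")  # same order as the model's outputs


def decide_keys(probs) -> set[str]:
    """Return the set of keys that should be held down for this frame."""
    return {action for action, p in zip(ACTIONS, probs) if p > THRESHOLDS[action]}
```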

The keyboard actions are sent using the pynput library, which simulates actual keypresses. To avoid constantly pressing and releasing keys, I implemented a state tracking system that only sends keyboard events when the desired state changes.
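A minimal sketch of the state-tracking idea: `KeyStateTracker` (a hypothetical name) remembers which keys are held and only calls press or release when the desired set changes. To keep it testable without a desktop session, it takes the press/release functions as callbacks. With pynput, they would wrap `Controller().press` and `.release`, shown commented out at the bottom.

```python
class KeyStateTracker:
    """Only emit press/release events when the desired key state changes."""

    def __init__(self, press, release):
        self.press = press      # callback taking a key name, e.g. wrapping pynput's press
        self.release = release  # callback taking a key name, e.g. wrapping pynput's release
        self.held: set[str] = set()

    def update(self, desired: set[str]) -> None:
        for key in sorted(desired - self.held):  # newly wanted keys
            self.press(key)
        for key in sorted(self.held - desired):  # keys no longer wanted
            self.release(key)
        self.held = set(desired)

    def release_all(self) -> None:
        """Let go of everything, e.g. when the script stops."""
        self.update(set())


# Wiring to pynput (not executed here; needs a desktop session):
# from pynput.keyboard import Controller, Key
# controller = Controller()
# KEYMAP = {"left": Key.left, "right": Key.right, "w": "w"}
# tracker = KeyStateTracker(lambda k: controller.press(KEYMAP[k]),
#                           lambda k: controller.release(KEYMAP[k]))
```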

Results and Observations

The results were better than I expected! The model successfully navigated the character around the route I had trained it on. There were a few spots where it would get confused, especially in areas with complex terrain.

Some interesting observations:

Generalization: The sample video shows the character flying in Terokkar Forest, but all the training was done in Hellfire Peninsula. This suggests the model learned general navigation principles rather than memorizing exact pixel patterns.

Failure points: Routes with extremely sharp turns often disoriented the model, causing the character to turn around and fly in the opposite direction.

Hyperparameters: I played around with a lot of the hyperparameters to try to get the lowest validation loss possible. I think the lowest I saw was around 0.10 or 0.11.

It was hard to quantify how much of a real-world difference each change made. I mixed and matched many hyperparameters but didn't have a good way of capturing which changes were most impactful. These included:

dropout rates

learning rate

number of epochs

weight decay

number of convolution layers

kernel sizes

number of parameters in each layer

confidence threshold for each keystroke
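One lightweight way to track which changes mattered (not something the post describes) is to append each run's hyperparameters and validation loss to a JSON Lines file and sort by loss afterwards. Validation loss alone doesn't capture how a model actually flies, so the `notes` field holds observations from test flights. `log_run` and `best_runs` are hypothetical helper names.

```python
import json
import time


def log_run(path: str, config: dict, val_loss: float, notes: str = "") -> None:
    """Append one training run (hyperparameters plus result) as a JSON line."""
    record = {"timestamp": time.time(), "val_loss": val_loss, "notes": notes, **config}
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def best_runs(path: str, k: int = 5) -> list[dict]:
    """Return the k logged runs with the lowest validation loss."""
    with open(path, encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    return sorted(runs, key=lambda r: r["val_loss"])[:k]
```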

I noticed early on that the model seemed much more effective when I enabled "Rotate Minimap" in the WoW interface menu. With this setting, the minimap rotates instead of the player arrow, so the character's heading is always at 12 o'clock instead of North being at 12 o'clock. My hypothesis is that this makes the pattern much easier to learn: when the character is on course, the route line should stay fixed, running up and down the minimap.

Future Improvements

While this project was successful as a proof of concept, there are several limitations:

Input data: The model only sees the minimap, not the actual game world, which limits its understanding of 3D obstacles. I wonder if I could get better results by capturing images of the character flying through the world in addition to the minimap.

Action space: I only implemented basic navigation (left, right, forward). In some areas I would have to press the space bar to make the character gain altitude and clear mountainous regions. This is related to capturing 3D input data, because the minimap provides no sense of altitude (the Z axis). It is also redundant to even try to predict the "w" action, because the character should be flying forward the entire time.

Mining nodes: the model doesn't do anything when ore nodes appear on the minimap as yellow circles. I could train another model to recognize a node and take some action, such as stopping flight to alert me to fly down and grab the ore. I could also use an existing addon such as minimap-alert.
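Before training a second model, a cheaper baseline (not something the post tried) would be to threshold the raw RGB minimap capture for yellow pixels. In the sketch below, the color thresholds, the minimum pixel count, and the RGB channel order are all assumptions that would need tuning against real captures. It has to run on the raw image, since the edge-detected input discards color.

```python
import numpy as np


def find_yellow_pixels(rgb: np.ndarray, min_rg: int = 180, max_b: int = 100) -> np.ndarray:
    """Boolean mask of 'yellow' pixels: strong red and green, weak blue (thresholds assumed)."""
    r, g, b = (rgb[..., i].astype(np.int16) for i in range(3))
    return (r >= min_rg) & (g >= min_rg) & (b <= max_b)


def node_visible(rgb: np.ndarray, min_pixels: int = 12) -> bool:
    """Heuristic: report a node when enough yellow pixels are present."""
    return int(find_yellow_pixels(rgb).sum()) >= min_pixels
```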

Despite all these interesting approaches, I think gathering more training data across more diverse zones would be the most effective way to improve on what I already have.

Conclusion

This project was a nostalgic and rewarding way to apply machine learning to something from my childhood. While I have no intention of actually using it in-game (see ToS note above), it was fascinating to see how a relatively simple neural network could automate a once-mundane task.

If you ever find yourself doing repetitive tasks in a game, maybe that’s your cue to turn it into a learning experience.

👉 Check out the code